Want to know how much website downtime costs, and the impact it can have on your business?

Find out everything you need to know in our new uptime monitoring whitepaper 2021

[lead]As a direct result of feedback from our users in our latest customer survey (January 2016), we’ve been busy working on the features and improvements you’ve asked for. Today we’re happy to announce the first of these new features: ‘Page Speed Monitoring’.[/lead]

This new feature is available to all our paid users with no limits, so if you already have a paid plan you can start using Page Speed Monitoring right away.

StatusCake Page Speed Monitoring uses a Chrome instance to load all of your site’s content, both internal and external. This means what you see is what your customers get. Each test is run using dedicated bandwidth of 250kb/s.

“We know that uptime is only half the battle. If your site isn’t performing as it should, you’ll lose visitors and, even more importantly, your brand reputation will be damaged.” – Daniel Clarke, StatusCake CTO

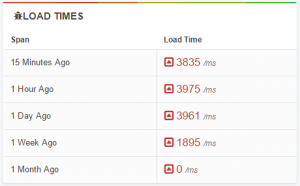

We store all page load times indefinitely for all users, so you can dig into your historic performance and see whether you’re moving in the right direction. For each test you can see the load time, page size and requests made (and drill into each individual request), as well as the distribution of content types.

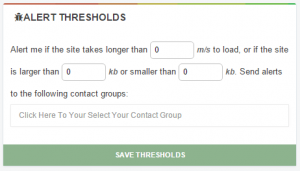
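The post doesn’t describe how StatusCake computes these figures internally, so the following is only a minimal sketch of how page size, request count and content type distribution can be derived from the list of requests made during one page load. The `Request` record and the `summarise` function are hypothetical names, and grouping by the part of the MIME type before the slash (so `image/png` and `image/jpeg` both count as `image`) is an assumption made for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Request:
    """One resource fetched while loading the page (hypothetical record shape)."""
    url: str
    content_type: str  # MIME type, e.g. "image/png" or "text/css"
    size_bytes: int


def summarise(requests: list[Request]) -> dict:
    """Summarise one page load: total size, request count and the
    share of bytes per content-type family (assumed grouping)."""
    total = sum(r.size_bytes for r in requests)
    by_type = Counter()
    for r in requests:
        # Group "image/png" and "image/jpeg" together under "image".
        family = r.content_type.split("/", 1)[0].strip().lower()
        by_type[family] += r.size_bytes
    # Fraction of total bytes per family; guard against an empty load.
    distribution = {
        family: (size / total if total else 0.0)
        for family, size in by_type.items()
    }
    return {
        "page_size_bytes": total,
        "request_count": len(requests),
        "content_type_distribution": distribution,
    }


page = [
    Request("https://example.com/", "text/html", 20_000),
    Request("https://example.com/app.js", "application/javascript", 50_000),
    Request("https://cdn.example.com/hero.jpg", "image/jpeg", 130_000),
]
print(summarise(page)["page_size_bytes"])  # 200000
```

Storing one such summary per test run is what makes a historic view possible: comparing the same fields over time shows whether page weight and request counts are trending up or down.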

Of course, being able to dig into historic data is only half the battle: StatusCake Page Speed Monitoring also lets you set trigger thresholds on metrics such as page load time and page size, and then get alerted via your existing contact groups.
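As a rough illustration of threshold-based alerting, here is a sketch assuming hypothetical `Thresholds` and `Result` types, a strict greater-than comparison, and a `route` helper that simply pairs each message with each contact in a group instead of actually sending email or SMS. None of these names come from StatusCake’s product or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Thresholds:
    """Hypothetical per-test trigger thresholds; None means "not set"."""
    max_load_time_ms: Optional[int] = None
    max_page_size_kb: Optional[int] = None


@dataclass
class Result:
    """Hypothetical metrics from one page speed test."""
    load_time_ms: int
    page_size_kb: int


def breaches(result: Result, limits: Thresholds) -> list[str]:
    """Return one message per threshold the result strictly exceeds."""
    alerts = []
    if limits.max_load_time_ms is not None and result.load_time_ms > limits.max_load_time_ms:
        alerts.append(
            f"Load time {result.load_time_ms} ms exceeds {limits.max_load_time_ms} ms"
        )
    if limits.max_page_size_kb is not None and result.page_size_kb > limits.max_page_size_kb:
        alerts.append(
            f"Page size {result.page_size_kb} KB exceeds {limits.max_page_size_kb} KB"
        )
    return alerts


def route(contact_group: list[str], messages: list[str]) -> list[tuple[str, str]]:
    """Pair every message with every recipient in a contact group
    (a stand-in for actually delivering the alert)."""
    return [(recipient, msg) for recipient in contact_group for msg in messages]


limits = Thresholds(max_load_time_ms=3000, max_page_size_kb=2048)
print(breaches(Result(load_time_ms=4200, page_size_kb=1500), limits))
# ['Load time 4200 ms exceeds 3000 ms']
```

Leaving a threshold as `None` disables that check, so a test can alert on load time alone, page size alone, or both.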

[themo_button text="Give Page Speed a Try, Sign Up Now" url="https://www.statuscake.com/pagespeed-monitoring" type="standard"]
